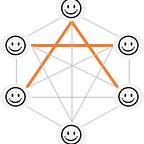

A Decentralized Sense-To-Sense Network

[P-S Standard]

Egger Mielberg

egger.mielberg@gmail.com

Abstract.

A purely decentralized Internet would allow its users to create or access a public or private worldwide information network with a guarantee of not being spammed, interrupted, or attacked by any third party.

A Semantic Normalizer (SN) would improve the user’s Internet search experience and greatly reduce search time. SN eliminates double-answering and double-meaning problems. It is part of the architectural solution of ArLLecta and requires no additional pre-installation.

We propose a solution to the nontransparent and domain-centered Internet problem using a decentralized sense-to-sense network. The S2S network allows users to create public or private zones for business or personal needs. The data of each user, individual or corporate, is decoded and published only with that user’s direct permission. The architecture of the S2S network prevents its data from being centralized by any single user.

However, each user can create, join, or leave any zone.

The main task of the S2S network is to let each user perform quick, sense-focused searches and to protect their data from unauthorized third parties.
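To make the zone idea concrete, here is a minimal Python sketch of how zone membership and permission-gated publishing might be modeled. Everything in it (the `Zone` class and its `invite`, `join`, `leave`, `publish`, `grant`, and `read` methods) is hypothetical and not taken from the S2S specification; a simple access check stands in for the decoding step, and no cryptography or decentralized storage is modeled.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Zone:
    """Hypothetical model of an S2S zone (illustration only, not the S2S spec)."""

    name: str
    creator: str
    private: bool = False
    members: set[str] = field(default_factory=set)
    invited: set[str] = field(default_factory=set)
    # owner -> payload that owner has stored in this zone
    data: dict[str, str] = field(default_factory=dict)
    # owner -> readers the owner has directly permitted to see that payload
    grants: dict[str, set[str]] = field(default_factory=dict)

    def __post_init__(self) -> None:
        self.members.add(self.creator)

    def invite(self, user: str) -> None:
        self.invited.add(user)

    def join(self, user: str) -> None:
        # A private zone admits only invited users; a public zone admits anyone.
        if self.private and user not in self.invited:
            raise PermissionError(f"{user} is not invited to {self.name}")
        self.members.add(user)

    def leave(self, user: str) -> None:
        # Leaving removes the user's data and every grant the user issued.
        self.members.discard(user)
        self.data.pop(user, None)
        self.grants.pop(user, None)

    def publish(self, owner: str, payload: str) -> None:
        if owner not in self.members:
            raise PermissionError(f"{owner} is not a member of {self.name}")
        self.data[owner] = payload

    def grant(self, owner: str, reader: str) -> None:
        self.grants.setdefault(owner, set()).add(reader)

    def read(self, reader: str, owner: str) -> str | None:
        # Data is revealed only to its owner or to readers the owner permitted.
        if reader == owner or reader in self.grants.get(owner, set()):
            return self.data.get(owner)
        return None


zone = Zone("research", creator="alice", private=True)
zone.invite("bob")
zone.join("bob")
zone.publish("alice", "draft notes")
print(zone.read("bob", "alice"))  # None: bob has no permission yet
zone.grant("alice", "bob")
print(zone.read("bob", "alice"))  # draft notes
```

Membership alone does not reveal anything here: even inside a zone, a reader sees another user’s data only after an explicit `grant`, which is one way to express “published only with direct permission”.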

1. Introduction

We are confident that there is no practical prospect of achieving a sense-focused result as long as a person is required to fill in tag-based website fields. The introduction and use of meta-tags in HTML webpages demonstrate this.

Digital documents are usually updated on a regular basis, and with each update the sense of a single phrase or sentence can change significantly. Therefore, a mechanism for sense-based search and for associations between network content must exist and operate dynamically.
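A minimal sketch of what “operating dynamically” could mean in practice: an index that discards a document’s old associations and re-derives them on every update. The `DynamicIndex` class is hypothetical, and “sense” is crudely approximated here by the set of lowercase words in a document; this is a stand-in assumption, not the sense model of the S2S network.

```python
from __future__ import annotations

import re
from collections import defaultdict


class DynamicIndex:
    """Hypothetical inverted index that re-derives associations on each update."""

    def __init__(self) -> None:
        self.terms_by_doc: dict[str, set[str]] = {}
        self.docs_by_term: defaultdict[str, set[str]] = defaultdict(set)

    @staticmethod
    def _terms(text: str) -> set[str]:
        # Stand-in for "sense": lowercase alphabetic words only.
        return set(re.findall(r"[a-z]+", text.lower()))

    def update(self, doc_id: str, text: str) -> None:
        # Remove the old associations first, so a changed sense stops matching.
        for term in self.terms_by_doc.get(doc_id, set()):
            self.docs_by_term[term].discard(doc_id)
        new_terms = self._terms(text)
        self.terms_by_doc[doc_id] = new_terms
        for term in new_terms:
            self.docs_by_term[term].add(doc_id)

    def related(self, doc_id: str) -> set[str]:
        # Documents sharing at least one term with doc_id.
        out: set[str] = set()
        for term in self.terms_by_doc.get(doc_id, set()):
            out |= self.docs_by_term[term]
        out.discard(doc_id)
        return out


index = DynamicIndex()
index.update("a", "Solar panel efficiency")
index.update("b", "Panel discussion")
print(index.related("a"))  # {'b'}
index.update("b", "Weather forecast")
print(index.related("a"))  # set(): the association vanished with the edit
```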

2. Problem

In attempting to create artificial intelligence, humankind faces many problems that appear unsolvable. Many engineers and IT specialists worldwide focus primarily on standardizing the presentation of digital data.

However, the core principles of forming human knowledge do not follow a predetermined pattern or mental template.

In the context of the World Wide Web, there are three key technologies (standards) that might be the main barriers to creating a Truly Intellectual and Self-Learning Internet for both humans and machines: URI, HTTP, and HTML.

URI.

A URI has two forms of presentation: URN (Uniform Resource Name) and URL (Uniform Resource Locator).

A URN identifies a resource by name within a specific namespace. For example, the URN of a book is specified by its unique edition number, whereas the URN of an electronic device is specified by its serial number.

The problem is that a URN gives no information about:

1. associative relationship between two or more URNs.

2. existing duplicates of a unique URN.

3. location of a specific URN.

4. the authenticity of a URN.

5. area of use of a URN.
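The limitation is visible in the URN syntax itself. The sketch below parses a simplified form of the RFC 8141 syntax (`urn:` followed by a namespace identifier and a namespace-specific string; the optional r-, q-, and f-components are ignored), so it is an illustration rather than a full validator. The function name `parse_urn` is hypothetical. Whatever URN is parsed, the result has exactly two fields, and none of the five properties listed above can be expressed in them.

```python
import re

# Simplified RFC 8141 syntax: "urn:" NID ":" NSS
# (the optional r-, q- and f-components are not handled here).
URN_RE = re.compile(
    r"^urn:(?P<nid>[a-z0-9][a-z0-9-]{0,30}[a-z0-9]):(?P<nss>.+)$",
    re.IGNORECASE,
)


def parse_urn(urn: str) -> dict[str, str]:
    """Split a URN into its namespace identifier and namespace-specific string."""
    match = URN_RE.match(urn)
    if match is None:
        raise ValueError(f"not a URN: {urn!r}")
    # Namespace identifiers are case-insensitive, so normalize to lowercase.
    return {"nid": match.group("nid").lower(), "nss": match.group("nss")}


print(parse_urn("urn:isbn:0451450523"))
```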

A URL specifies both the access mechanism and the network location. For example, the URL http://www.example.com/author/main_page.html specifies the location “www.example.com/author/main_page.html”, accessed by the mechanism (protocol) “HTTP”.

The problem is that a URL gives no information about:

1. the degree of data relevance of a URL.

2. associative relationship between two or more URLs.

3. area of use of a specific URL.

4. authenticity and ownership of a URL.

5. semantic weight of a specific URL towards other URLs.

In other words, beyond the name of a data resource and its network address, neither a URN nor a URL describes anything semantic or associative that might be useful for qualitative analytics or prediction.
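Splitting the example URL with Python’s standard `urllib.parse.urlsplit` shows the same thing for URLs: the result has five fields (scheme, network location, path, query, fragment), and none of them can hold relevance, associations, area of use, ownership, or semantic weight.

```python
from urllib.parse import urlsplit

url = "http://www.example.com/author/main_page.html"
parts = urlsplit(url)

# Every field a URL carries: access mechanism, location, and optional extras.
for name, value in parts._asdict().items():
    print(f"{name:9} {value!r}")
```

For this URL, `scheme` is `'http'`, `netloc` is `'www.example.com'`, `path` is `'/author/main_page.html'`, and `query` and `fragment` are empty strings.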

HTTP.

First, HTTP is a client-server protocol, which allows a third-party server to store personal user data. In other words, today a user has practically no way to obtain information on the Internet without sharing their data.

Second, the main resource HTTP operates on is the URI. As shown above, a URI provides no sense-focused or resource-to-resource relational data; it works only with names and locations.

Third, an HTTP message consists of three main parts: a start line, headers, and a message body. The headers cover a number of functions, including resource type, encoding, authorization, cache control, range, and location. However, HTTP still lacks a mechanism for sense-disposition between different HTTP queries (not to be confused with the Content-Disposition header).
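The three-part structure can be shown with a small splitter for an HTTP/1.1 message. This is a minimal sketch (the function name `split_http_message` is hypothetical): it ignores repeated header names, obsolete line folding, and chunked bodies. Each header is an independent name/value pair describing this one message; nothing in the structure links one query to another by sense.

```python
def split_http_message(raw: bytes) -> tuple[str, dict[str, str], bytes]:
    """Split an HTTP/1.1 message into start line, headers, and body.

    Minimal sketch: repeated header names overwrite each other, and
    chunked transfer coding is not decoded.
    """
    # An empty line (CRLF CRLF) separates the header section from the body.
    head, _, body = raw.partition(b"\r\n\r\n")
    lines = head.decode("iso-8859-1").split("\r\n")
    start_line = lines[0]
    headers: dict[str, str] = {}
    for line in lines[1:]:
        name, _, value = line.partition(":")
        # Header names are case-insensitive, so store them lowercased.
        headers[name.strip().lower()] = value.strip()
    return start_line, headers, body


raw = (
    b"GET /author/main_page.html HTTP/1.1\r\n"
    b"Host: www.example.com\r\n"
    b"Accept: text/html\r\n"
    b"Cache-Control: no-cache\r\n"
    b"\r\n"
)
start, headers, body = split_http_message(raw)
print(start)
print(headers)
```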

Beyond the above, HTTP is a heavily overloaded protocol and offers no architectural means of determining URI authenticity.

HTML.

HTML is a tag-based markup language. It provides a means to create a structured document by denoting structural semantics for text such as headings, paragraphs, lists, links, quotes, and other items. Each HTML document can include meta-tags such as keywords and description, which define the website’s metadata. Tags and metadata were intended to help classify websites and their data by topic, subject, sense, and so on. The problem, however, is that an HTML document still:

1. does not separate internal website data by topic.

2. does not give a mechanism for a clear and correct website description.

3. does not implement a sense-to-sense approach between internal website data and external related links.
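The second point can be illustrated by reading meta-tags with Python’s standard `html.parser.HTMLParser`. The page below is a made-up example: its keywords describe solar energy while its body is about cooking, and nothing in HTML detects or prevents the mismatch, because meta-tag values are just free-text strings supplied by the author. The `MetaTagCollector` class is hypothetical.

```python
from html.parser import HTMLParser


class MetaTagCollector(HTMLParser):
    """Collects <meta name="..." content="..."> pairs from an HTML document."""

    def __init__(self) -> None:
        super().__init__()
        self.meta: dict[str, str] = {}

    def handle_starttag(self, tag, attrs):
        # HTMLParser reports tag and attribute names in lowercase.
        if tag != "meta":
            return
        attr = dict(attrs)
        name, content = attr.get("name"), attr.get("content")
        if name and content is not None:
            self.meta[name.lower()] = content


page = """<html><head>
<meta name="keywords" content="sun, energy, panel">
<meta name="description" content="Anything the author chooses to write">
</head><body><p>An article about cooking.</p></body></html>"""

collector = MetaTagCollector()
collector.feed(page)
collector.close()
print(collector.meta)
```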

A non-commercial organization, the World Wide Web Consortium (W3C), recommends a solution, the Resource Description Framework (RDF), for “representing metadata about Web resources, such as the title, author, and modification date of a Web page, copyright and licensing information about a Web document, or the availability schedule for some shared resource” [5]. “RDF is based on the idea that the things being described have properties which have values…”. The W3C uses the triple-based concept “subject-predicate-object”.
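The subject-predicate-object idea can be sketched with plain Python tuples and a wildcard pattern match. This is only an illustration of the triple model, not an RDF library (real applications would use a dedicated toolkit); the predicates use the Dublin Core element namespace, while the page URI and literal values are made-up examples and `match` is a hypothetical helper.

```python
from __future__ import annotations

from typing import NamedTuple


class Triple(NamedTuple):
    subject: str
    predicate: str
    obj: str  # "object" would shadow the built-in name


DC = "http://purl.org/dc/elements/1.1/"  # Dublin Core element namespace
PAGE = "http://www.example.org/index.html"

# Illustrative statements about one Web page (values are made up).
graph: set[Triple] = {
    Triple(PAGE, DC + "creator", "http://www.example.org/staffid/85740"),
    Triple(PAGE, DC + "title", "Example home page"),
    Triple(PAGE, DC + "date", "1999-08-16"),
}


def match(graph: set[Triple], s: str | None = None,
          p: str | None = None, o: str | None = None) -> set[Triple]:
    """Return the triples matching a pattern; None acts as a wildcard."""
    return {
        t for t in graph
        if (s is None or t.subject == s)
        and (p is None or t.predicate == p)
        and (o is None or t.obj == o)
    }


for t in match(graph, s=PAGE, p=DC + "creator"):
    print(t.obj)
```

Each triple states one property value of one resource; any relation between two statements has to be expressed by yet another explicit triple.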
