Truly transparent and predictable operation of artificial intelligence can significantly improve both the quality and the safety of human life. In our opinion, artificial intelligence can achieve self-awareness only if it makes every decision independently.

We present three basic laws of artificial intelligence, formulated primarily with their practical implementation in mind.

1. Introduction

With the advent of the Internet, the volume of digital information around the world began to increase at an exponential rate. However, there are still no high-quality algorithms for processing large amounts of unstructured data that can connect millions of objects of different natures through one or more ‘sense’ properties.

Here we are not talking about the problem of classifying a data sample according to some attribute, but about the problem of finding an associative-semantic connection between objects or events distributed in time.

One of the main tasks in creating a full-fledged self-developing artificial intelligence, in our opinion, is the task of identifying an associative semantic connection between two objects or events of a different nature located at different time points.

In addition, it is extremely important that every tool used to solve problems in the field of artificial intelligence (algorithms, methodologies, etc.) can be implemented in practice in a reasonable time.

As an example of a superficial formulation that was not thought through in terms of practical implementation, consider the first of the Three Laws of Robotics by science fiction writer Isaac Asimov, which some global organizations take very seriously as a candidate for implementation in the field of artificial intelligence:

“A robot may not injure a human being or, through inaction, allow a human being to come to harm”

Even a first examination of this law with the tools of traditional mathematics leads to a direct contradiction. Let A denote the actions that ‘benefit’ a human being; the actions that bring ‘harm’ to a human being can then be denoted ⌐A. Suppose further that A is ‘true’ for human being B, and that ⌐A is proven to be harmful to B. The question then immediately arises: is A the same ‘benefit’ for human beings C, D, E, etc.?

If the answer is Yes, then ⌐A must also be ‘harm’ to C, D, E, etc. But, in this case, in practice, the following equalities must be fulfilled:

B ≡ C, B ≡ D, B ≡ E, … (1)

That is, B, C, D and E are one human being.

Moreover, since the sets A and ⌐A are countable, then, according to Gödel’s incompleteness theorem, ⌐A does not exist for B, C, D, and E.

And finally, abstracting further, the expression A∈B does not imply any of the expressions A∈C, A∈D, A∈E, etc.

2. Problem

At present, there are no clearly formulated laws, implementable in practice, for the task of building a fully functional self-developing artificial intelligence.

The laws below can also be applied to the practical implementation of a global network of artificial intelligence or a network of intelligent digital agents.

3. Solution

1. Artificial intelligence must be identified by ID (AI-ID) and GN (AI-GN).

AI-ID is an identification number assigned to an artificial intelligence by its first developer. It should be directly linked to AI-GN so that the two form a single whole.

As an analogy, consider a public/private key pair in asymmetric encryption: the created artificial intelligence can be modified only if both keys are present simultaneously.

AI-GN is a number generated by the same company or individual who creates or modifies the basic genetic functionality of artificial intelligence laid down by its creator.

This number is generated using the entire top-level list of genetic (basic) functionality existing at the time of the start of the generation process. In this case, the previous GN value (PGN) is retained. A hash function can be used to implement the generation of the GN value. Blockchain technology can be used to implement storage of AI-ID, AI-GN, and AI-PGN values.
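The generation step described above can be sketched as follows. This is only an illustration under stated assumptions: SHA-256 stands in for the unspecified hash function, the function list is sorted before hashing so that ordering does not affect the result, and the names `Genome`, `compute_gn`, `create_genome`, and `modify_genome` are hypothetical, not part of any existing technology named in the text.

```python
import hashlib
from dataclasses import dataclass
from typing import List, Optional


def compute_gn(functions: List[str]) -> str:
    """Hash the top-level list of genetic functions into a GN value.

    Assumption: the list is sorted and newline-joined so that the same
    set of functions always yields the same GN.
    """
    canonical = "\n".join(sorted(functions))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


@dataclass
class Genome:
    ai_id: str                 # AI-ID assigned by the first developer
    functions: List[str]       # top-level genetic functionality
    gn: str                    # AI-GN derived from the functions
    pgn: Optional[str] = None  # previous GN; None for the first version


def create_genome(ai_id: str, functions: List[str]) -> Genome:
    """Create the first version of a genome; there is no PGN yet."""
    return Genome(ai_id=ai_id, functions=list(functions),
                  gn=compute_gn(functions))


def modify_genome(genome: Genome, new_functions: List[str]) -> Genome:
    """Return a new genome version whose PGN retains the previous GN."""
    return Genome(ai_id=genome.ai_id, functions=list(new_functions),
                  gn=compute_gn(new_functions), pgn=genome.gn)
```

Each call to `modify_genome` produces a new GN and keeps the old one as PGN, so successive versions can be linked together when they are stored.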

2. Artificial intelligence can be supplemented with any functionality that does not nullify its genetic functionality.

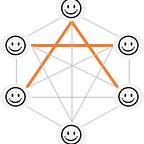

To check the conformity of the ‘new’ functionality in terms of the absence of contradictions with the genetic functionality, the following table of functional correspondence (TFC) can be used:

The above table shows the rule for adding ‘new’ AI functionality. For each of the genetic functions, a check should be carried out for the possibility of its parallel implementation with each ‘new’ introduced function.

The following example shows a case where parallel implementation is impossible:

Function A (genetic) — “send sms to a friend”.

Function B (new) — “do not send sms”.

Another more complex example might be the following:

Function A (genetic) — “provide medical consultation on the diagnosis of cardiovascular diseases”.

Function B (new) — “do not diagnose cardiovascular diseases”.

The value in the table of functional correspondence at the intersection of two functions, in this case, will be ‘True’ since ‘consultation’ and ‘diagnostics’ have different meanings.
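A table of functional correspondence for the two examples above can be sketched as below. The text does not define how the compatibility of two functions is decided, so `toy_compatible` is a deliberately naive, hypothetical stand-in: it treats a new function of the form "do not X" as contradictory only when the genetic function contains X verbatim. A real check would rely on the shared vocabulary of entities rather than on string matching.

```python
from typing import Callable, Dict, List, Tuple

NEGATION_PREFIX = "do not "


def toy_compatible(genetic: str, new: str) -> bool:
    """Toy heuristic (an assumption, not the author's method):
    'do not X' conflicts with a genetic function that contains X verbatim."""
    genetic = genetic.strip().lower()
    new = new.strip().lower()
    if new.startswith(NEGATION_PREFIX):
        forbidden = new[len(NEGATION_PREFIX):]
        return forbidden not in genetic
    return True


def build_tfc(
    genetic_functions: List[str],
    new_functions: List[str],
    compatible: Callable[[str, str], bool] = toy_compatible,
) -> Dict[Tuple[str, str], bool]:
    """Check every genetic function against every new function."""
    return {(g, n): compatible(g, n)
            for g in genetic_functions
            for n in new_functions}


def accepted_functions(tfc: Dict[Tuple[str, str], bool]) -> List[str]:
    """Return the new functions that are 'True' against all genetic functions."""
    verdicts: Dict[str, bool] = {}
    for (_, new), ok in tfc.items():
        verdicts[new] = verdicts.get(new, True) and ok
    return [new for new, ok in verdicts.items() if ok]
```

With this heuristic, "do not send sms" is rejected against "send sms to a friend", while "do not diagnose cardiovascular diseases" is accepted against the medical consultation function, which matches the two cases discussed above.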

3. All created artificial intelligence must use a single ontological vocabulary of entities.

Unifying the ontological vocabulary removes the problem of multiple interpretations of individual entities.

The vocabulary itself can have a hierarchical structure, divided according to thematic or other criteria. For example, one entity ‘glass’ can have two contextually consistent sentences, ‘glass is half empty’ and ‘glass is half full’, as its attributes.
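One possible shape for such a vocabulary is sketched below. It assumes a two-level hierarchy (topic, then entity) and enforces a single definition per entity across all topics; the class names `Entity` and `Vocabulary` and the topic name `tableware` are hypothetical choices made only for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Entity:
    name: str
    attributes: List[str] = field(default_factory=list)


@dataclass
class Vocabulary:
    # topic -> entity name -> Entity
    topics: Dict[str, Dict[str, Entity]] = field(default_factory=dict)

    def add(self, topic: str, name: str, attributes: List[str]) -> None:
        """Register an entity once; a second definition anywhere is rejected."""
        for entities in self.topics.values():
            if name in entities:
                raise ValueError(f"entity {name!r} is already defined")
        self.topics.setdefault(topic, {})[name] = Entity(name, list(attributes))

    def lookup(self, name: str) -> Entity:
        """Return the single shared definition of an entity."""
        for entities in self.topics.values():
            if name in entities:
                return entities[name]
        raise KeyError(name)


vocabulary = Vocabulary()
vocabulary.add("tableware", "glass",
               ["glass is half empty", "glass is half full"])
```

Rejecting a second definition of the same entity is one simple way to guarantee that every artificial intelligence reading the vocabulary resolves ‘glass’ to the same set of attributes.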

Arllecta technology [1] can be used as a practical implementation of the above three laws. This technology is based on the innovative mathematical theory Sense Theory [2], specially created for the purpose of solving problems in the field of artificial intelligence. For example, consider the following practical task:

“To create software for a vending machine for the production and sale of fruit juice.”

Initial data:

1. 10 kinds of flavoring powders.

2. 5 types of glasses.

3. 2 cooling modes: moderate and high.

The task of the software is to select a combination of powders in such a way that the resulting drink corresponds to the selected taste priority of the user. Communication with the user is realized through the chat built into the vending machine.

In our case, solving the problem comes down to creating an artificial intelligence that will communicate with the user and, as a result of this communication, prepare fruit juice for that user.

Now let’s look at the creation and operation of the ‘fruit’ artificial intelligence by applying the three formulated laws of artificial intelligence in sequence.

The Law of AI I:

a) generating the AI ID: an alphanumeric generator is used, with an ID length of at least 16 characters to reduce the chance of collisions between generated values.

b) generating the GN: a cryptographic hash function is used for its preimage resistance; here the preimage is the list of genetic functionality of the artificial intelligence being created.

NACA (Neuro-Amorphic Construction Algorithm) technology [10] can be used as one of the possible solutions to the practical implementation of this task. The resulting GN value is attached to the ID value as its attribute.

The combination of ID and GN forms a digital identifier — the digital genome of the artificial intelligence being created.
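Steps a) and b) and the resulting digital genome can be sketched as follows. This is a minimal illustration and not the NACA technology mentioned above: it assumes Python's `secrets` module as the alphanumeric generator, SHA-256 as the cryptographic hash function, and a simple `ID:GN` string as the combined identifier. The function names are hypothetical.

```python
import hashlib
import secrets
import string
from typing import List

ALPHABET = string.ascii_letters + string.digits  # 62 alphanumeric symbols
MIN_ID_LENGTH = 16


def generate_ai_id(length: int = MIN_ID_LENGTH) -> str:
    """Step a): draw an alphanumeric ID from a cryptographically secure source."""
    if length < MIN_ID_LENGTH:
        raise ValueError("the ID must be at least 16 characters long")
    return "".join(secrets.choice(ALPHABET) for _ in range(length))


def generate_gn(genetic_functions: List[str]) -> str:
    """Step b): hash the genetic functional list (SHA-256 is an assumption)."""
    canonical = "\n".join(genetic_functions)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def digital_genome(ai_id: str, gn: str) -> str:
    """Attach the GN to the ID; the 'ID:GN' layout is an assumed format."""
    return f"{ai_id}:{gn}"


ai_id = generate_ai_id()
gn = generate_gn(["Making fruit juice for a person."])
genome = digital_genome(ai_id, gn)
```

With 62 possible symbols per position, a 16-character ID has 62^16 possible values, which is what keeps accidental duplicates unlikely.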

The basic genetic functionality can only be changed by the company or individual who owns this combination.

When making changes to the basic genetic functionality of artificial intelligence, a PGN value is created equal to the previous GN value.

At the same time, it is extremely important to store the sequence of PGN values using blockchain or a similar technology.

Such storage makes it possible, first, to quickly identify the latest changes made to the digital genome of an artificial intelligence and, second, to block fraudulent actions involving the illegal use of an individual artificial intelligence for other purposes.
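The storage idea can be illustrated with a hash-linked, append-only list, which is the core mechanism behind a blockchain without the distributed consensus part. The sketch below is an assumption-laden toy for a single artificial intelligence: the record layout and the names `append_record`, `verify_chain`, and `latest_gn` are hypothetical, and SHA-256 over a JSON encoding is chosen only for convenience.

```python
import hashlib
import json
from typing import Dict, List

Record = Dict[str, str]


def _record_hash(body: Record) -> str:
    """Hash a record body (all fields except 'hash') deterministically."""
    payload = json.dumps(body, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def append_record(chain: List[Record], ai_id: str, gn: str) -> None:
    """Append a new genome version; PGN is taken from the previous record."""
    prev = chain[-1] if chain else None
    body = {
        "ai_id": ai_id,
        "gn": gn,
        "pgn": prev["gn"] if prev else "",
        "prev_hash": prev["hash"] if prev else "",
    }
    chain.append({**body, "hash": _record_hash(body)})


def verify_chain(chain: List[Record]) -> bool:
    """Detect edits to any stored record or broken links between records."""
    for i, record in enumerate(chain):
        body = {k: v for k, v in record.items() if k != "hash"}
        if record["hash"] != _record_hash(body):
            return False
        expected_prev = chain[i - 1]["hash"] if i > 0 else ""
        expected_pgn = chain[i - 1]["gn"] if i > 0 else ""
        if record["prev_hash"] != expected_prev or record["pgn"] != expected_pgn:
            return False
    return True


def latest_gn(chain: List[Record]) -> str:
    """The most recent change to the digital genome is the last record."""
    return chain[-1]["gn"]
```

Because each record carries the hash of its predecessor, altering an earlier GN invalidates the chain from that point on, which is the property that supports both goals listed above.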

In this case, the basic genetic functionality (GF) will be as follows:

“Making fruit juice for a person.”

The Law of AI II:

This law allows us to add any functionality to the created artificial intelligence that does not contradict the basic genetic functionality.

The initial data of the task under consideration forms the main client functionality (CF):

- F1: combine 10 types of flavoring powders

- F2: use 3 types of glasses: small, medium & large

- F3: use 2 cooling modes: medium & high

To check the absence of contradictions between the values of CF and GF, we use the functional correspondence table:

The Law of AI III:

This law defines a single unified vocabulary of entities to avoid collisions in the interpretation of both genetic (GF) and client (CF) functionality.

In our case, GF can have the following interpretation:

a) “making” — the use of any number of food ingredients to obtain the final product

b) “fruit juice” — a liquid consisting of water and/or edible fruits that is not harmful to humans when consumed.

Interpretation of CF can be implemented in a similar or other way reflecting the essence of each action included in CF.

Now let’s look at how the ‘fruit’ artificial intelligence works, using several practically possible cases as examples.